EvalsOne Unveils Cutting-Edge Platform for Evaluating GenAI Applications

EvalsOne is designed to help developers and researchers unlock the full potential of GenAI applications.

LONDON, LONDON, UNITED KINGDOM, June 11, 2024 /EINPresswire.com/ -- EvalsOne is pleased to announce the launch of its enhanced platform, designed to help developers, researchers, and AI professionals unlock the full potential of generative AI. As AI models advance rapidly, ensuring that applications are reliable and perform at their best has become increasingly complex. EvalsOne addresses these challenges with a comprehensive solution, providing the tools and insights needed to streamline evaluation and maintain the quality of generative AI applications at every stage of development.

Key Features and Benefits of EvalsOne:

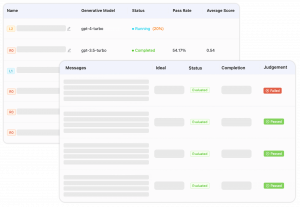

Intuitive Design and Automated Insights: EvalsOne simplifies the evaluation process with an intuitive user interface that requires no coding experience. The platform provides automated insights, making it easier for users to identify areas for improvement and optimize their models effectively.

Innovative "Fork" Feature: One of EvalsOne's standout capabilities is its fork feature, which lets users easily compare different models and prompts, facilitating A/B testing and enabling rapid iteration. By forking and comparing versions, users can identify the best-performing configurations and improve their AI applications more efficiently.

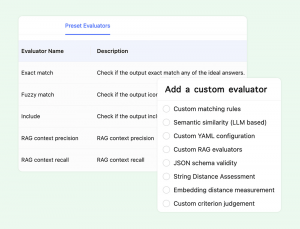

Comprehensive Evaluators and Metrics: EvalsOne offers a variety of evaluators and metrics that cater to different evaluation needs. Users can choose from pre-set industry-leading metrics or create tailored assessments to suit specific requirements. This flexibility ensures that evaluations are precise and aligned with the unique goals of each project.

Seamless Integration with Cloud Services and Local Models: The platform supports seamless integration with various cloud services, local models, orchestration tools, and AI bot APIs. This versatility allows users to connect their preferred tools and services, creating a cohesive and efficient workflow for AI development and evaluation.

LLM Prompt Crafting and Refinement: EvalsOne helps users craft and refine large language model (LLM) prompts. Users can test and optimize prompts so that their AI applications deliver accurate and reliable outputs.

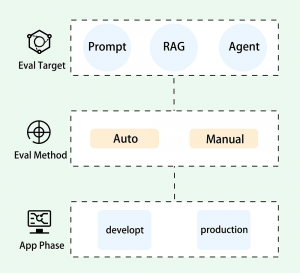

Streamlined Testing and Integration: EvalsOne streamlines the testing and integration of models into workflows. The platform supports end-to-end testing of agents, evaluating every step of the workflow and seamlessly incorporating human evaluation. This comprehensive approach helps ensure that AI models are robust and reliable before deployment.

Advanced Evaluation Metrics and Methodologies: EvalsOne integrates multiple industry-leading metrics and supports the creation of custom metrics compatible with industry standards. This lets users conduct precise and comprehensive assessments, even in complex scenarios.

Commitment to Quality and User Experience:

EvalsOne is committed to making high-quality AI app evaluation accessible and user-friendly. Emphasizing enterprise-grade stability and a user-centric design, EvalsOne enhances efficiency, refines processes, and instills confidence in AI creations. The platform's multi-threaded operations and robust privacy measures ensure that evaluations are conducted securely and efficiently, meeting the rigorous demands of enterprise AI development.

Supporting the AI Community:

EvalsOne is dedicated to providing an advanced platform for the evaluation of generative AI models, simplifying and enhancing the development process for AI professionals. With a focus on accessibility, stability, and user-friendliness, EvalsOne aspires to be a leader in AI evaluation solutions. The platform's versatile and comprehensive tools are designed to support a wide range of users, including prompt engineers, RAG app builders, GenAI developers, and researchers.

Prompt Engineers: Develop and test LLM prompts, perform stability testing, and conduct A/B tests with ease.

RAG App Builders: Prepare datasets, integrate with RAG pipelines, and receive improvement recommendations to optimize applications.

GenAI Developers: Compare local models with LLMs, integrate with source code, and create custom evaluators to fit specific needs.

Researchers: Explore new metrics, conduct extensive model testing, and develop cutting-edge evaluation techniques.

Join Us in Revolutionizing AI Evaluation:

At EvalsOne, we believe in pushing the boundaries of AI evaluation. Our goal is to empower AI professionals with reliable, innovative, and user-friendly tools that enhance the quality and performance of their AI models. By providing a comprehensive and flexible evaluation platform, we help our clients gain a competitive edge in the rapidly evolving AI landscape.

Discover how EvalsOne can transform your AI projects today. Visit us at evalsone.com and follow us on LinkedIn for the latest updates and insights.

Robert

EvalsOne
